AI's Dark Side: Meta Struggles to Block Viral Deepfake Celebrity Explicit Images on Facebook

2025-02-17 17:27:58

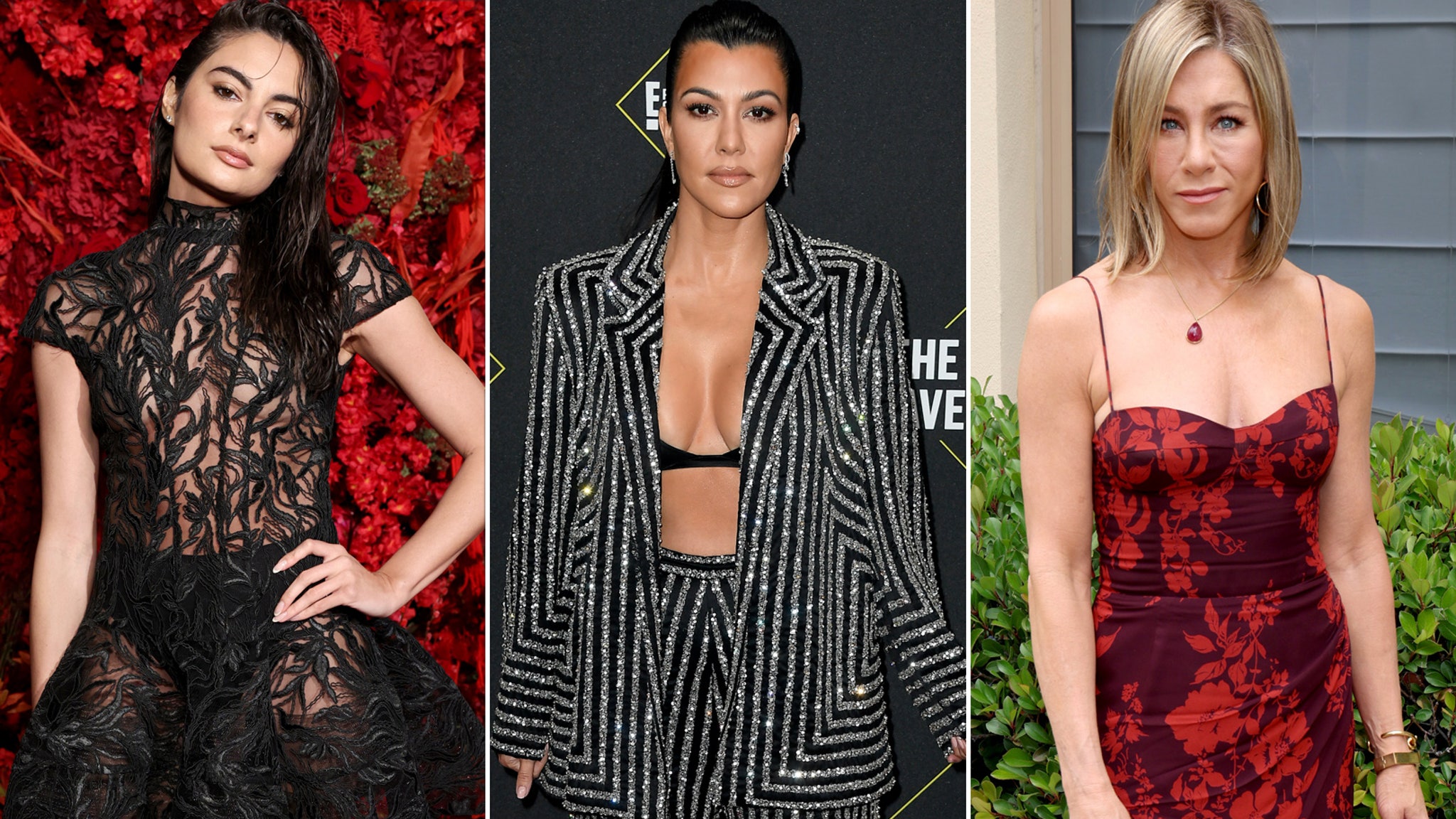

Meta is struggling to control the rapid spread of AI-generated, sexually explicit deepfake images of high-profile celebrities such as Miranda Cosgrove and Scarlett Johansson on Facebook. The company appears unable to detect and remove these unauthorized and harmful synthetic images as quickly as they are reshared across user networks.

The deepfake phenomenon has raised serious concerns about digital privacy, consent, and the potential misuse of artificial intelligence technology to create non-consensual, manipulated visual content. Celebrities are particularly vulnerable to these malicious digital attacks, which can cause significant emotional distress and reputational damage.

Despite Meta's existing content moderation policies, the volume and realism of these AI-generated images are overwhelming the platform's current detection and removal systems. The problem highlights the urgent need for better tools to identify harmful synthetic media and stop it from spreading.

The incident underscores the growing challenges social media platforms face in protecting individuals from digital harassment and maintaining the integrity of online spaces in an era of rapidly evolving artificial intelligence technologies.

Digital Deception: The Alarming Rise of AI-Generated Celebrity Deepfakes on Social Media

In the rapidly evolving digital landscape, a disturbing trend has emerged that threatens the privacy and personal integrity of public figures. AI-powered deepfake technology has spread so widely that social media platforms are struggling to contain manipulated, sexually explicit synthetic images of celebrities who never consented to them.

Unmasking the Dark Side of Digital Manipulation

The Technological Nightmare of Synthetic Media

The advent of advanced artificial intelligence has ushered in a troubling new era of digital manipulation. Machine learning models have become sophisticated enough that bad actors can generate hyper-realistic images that blur the line between reality and fabrication. Celebrities like Miranda Cosgrove and Scarlett Johansson have become unwilling targets, with their likenesses used without consent. Modern neural networks can produce images that are nearly indistinguishable from authentic photographs. This capability poses a profound ethical challenge and exposes serious weaknesses in how digital content is verified and how people's images are protected.

Platform Vulnerabilities and Inadequate Responses

Social media giants like Meta have shown a striking inability to contain the spread of deepfake content. Despite investing billions in content moderation technology, platforms remain overwhelmed by the volume and complexity of synthetic media. Current moderation systems are largely reactive, acting on content only after it has been posted and shared, which leaves them a step behind rapidly evolving AI-generated imagery. The machine learning classifiers these platforms rely on also struggle to distinguish authentic images from fabricated ones, creating an environment that malicious actors can readily exploit.

Psychological and Professional Implications for Targeted Individuals

The psychological harm suffered by celebrities targeted with non-consensual synthetic imagery is severe. Beyond the immediate violation of personal boundaries, these deepfakes can do substantial professional and personal damage, undermining carefully cultivated public personas and causing significant emotional distress. Victims find that their likeness can be weaponized and distributed globally within moments. Weak legal frameworks and limited technical countermeasures leave them with few effective means of defense.

Emerging Technologies and Potential Solutions

Researchers and technology companies are developing more sophisticated mechanisms for detecting deepfakes. Promising approaches include advanced cryptographic techniques, blockchain-based verification systems, and machine learning models trained to identify synthetic imagery. Digital watermarking, AI-powered forensic analysis, and collaborative cross-platform detection networks are also emerging as ways to limit the spread of malicious synthetic media. However, these solutions remain at an early stage and will require significant investment and interdisciplinary collaboration.

Ethical and Societal Implications

The deepfake phenomenon is more than a technological challenge. It is an ethical crisis that calls into question fundamental concepts of consent, identity, and digital rights. As artificial intelligence continues to advance, society must develop comprehensive legal and technological frameworks to protect individuals from unauthorized digital manipulation. The current situation demands urgent action from policymakers, technology companies, and digital rights advocates to establish robust mechanisms that safeguard personal digital identities and prevent the weaponization of synthetic media.