Viral Deepfake Deception: Celebrities' Anti-Ye Stance Exposed as AI Fabrication

2025-02-12 22:59:58

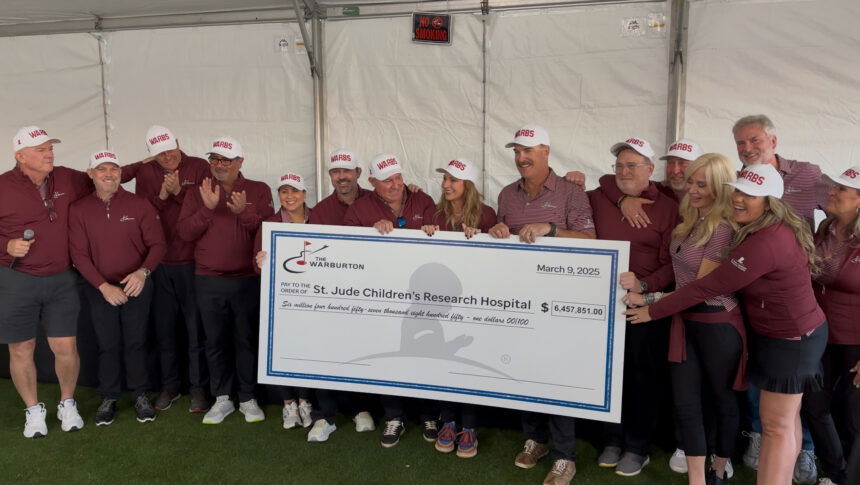

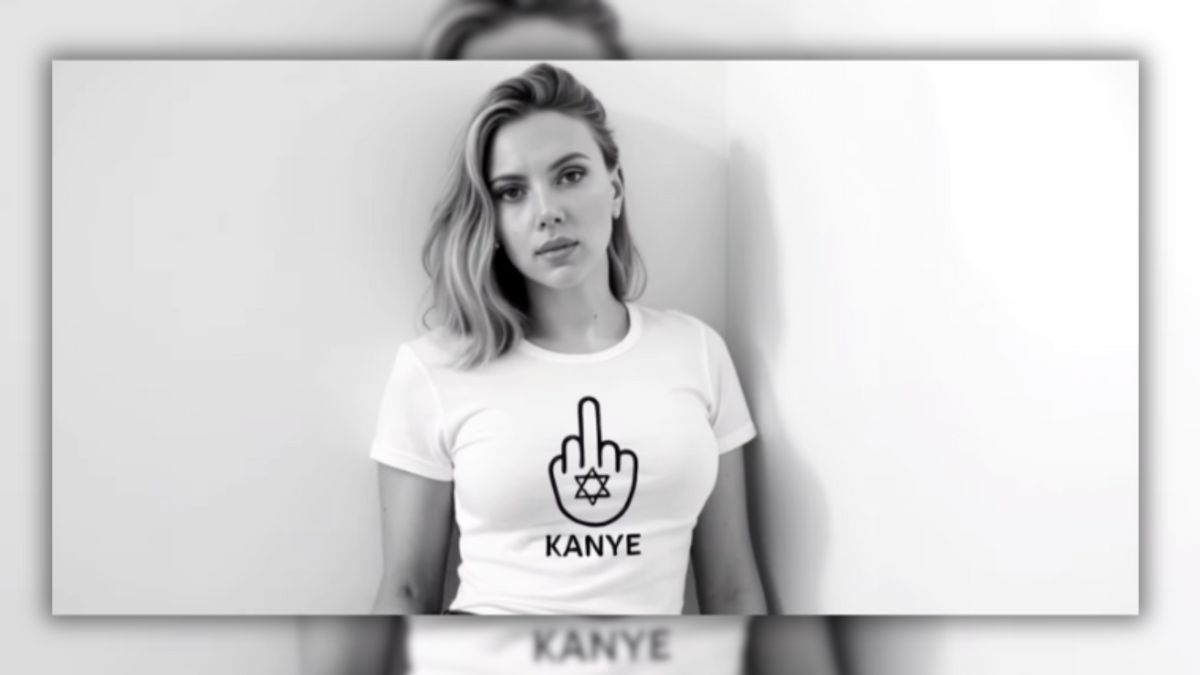

A viral social media video has sparked widespread attention by seemingly depicting Hollywood stars taking a united stand against controversial rapper Kanye West. The video, which quickly circulated online, appeared to show high-profile celebrities like Scarlett Johansson and Jerry Seinfeld wearing protest T-shirts targeting West's recent inflammatory statements and actions.

Taken at face value, the apparent statement from these prominent entertainment figures suggested a growing industry-wide pushback against West's increasingly polarizing public persona. Although the video's authenticity was unverified as it spread, it quickly generated significant buzz and conversation across social media platforms.

Fans and followers have been quick to share and comment on the footage, with many expressing support for what they perceive as celebrities using their platform to address problematic behavior. The video highlights the ongoing tension between artistic expression and social responsibility in the entertainment world.

As the story continues to develop, audiences remain curious about the potential implications of such a public statement from well-known personalities in the entertainment industry.

Digital Deception: When Celebrity Activism Meets Artificial Intelligence

In the rapidly evolving landscape of digital media and artificial intelligence, the boundaries between reality and fabrication have become increasingly blurred, challenging our perception of truth and authenticity in the age of technological manipulation.

Unraveling the Complex Web of Digital Misinformation and Celebrity Representation

The Rise of AI-Generated Celebrity Narratives

The emergence of sophisticated artificial intelligence technologies has dramatically transformed the way visual content is created and consumed. Advanced machine learning algorithms now possess the capability to generate hyper-realistic images and videos that can convincingly mimic real-world scenarios, blurring the lines between authentic documentation and digital fabrication. These technological developments have profound implications for media consumption, challenging traditional notions of visual verification and credibility. Researchers and digital forensics experts have increasingly highlighted the potential dangers of such technologies, emphasizing the need for robust verification mechanisms. The ability to generate seemingly authentic visual representations of celebrities engaging in specific activities or expressing particular sentiments represents a significant ethical and technological challenge.

Psychological Impact of Digital Manipulation

The proliferation of AI-generated content introduces complex psychological dynamics that fundamentally alter audience perception and engagement. When viewers encounter seemingly authentic representations of well-known personalities, their cognitive processes struggle to distinguish between genuine and fabricated imagery. This phenomenon creates a profound sense of uncertainty and erodes trust in traditional media channels. Psychological studies suggest that repeated exposure to manipulated content can gradually desensitize individuals, potentially undermining their critical thinking capabilities. The emotional and cognitive dissonance generated by such technological interventions can have far-reaching consequences for individual and collective understanding of media representation.

Technological Mechanisms Behind Visual Fabrication

Contemporary AI systems leverage advanced neural networks and deep learning algorithms to generate remarkably convincing visual content. These sophisticated technologies analyze extensive datasets of celebrity imagery, learning intricate facial characteristics, movement patterns, and contextual nuances. By synthesizing this information, artificial intelligence can produce images and videos that appear indistinguishable from authentic recordings. The technical complexity underlying these generative processes involves multiple layers of machine learning, including generative adversarial networks (GANs) that continuously refine visual output through iterative computational processes. This technological ecosystem represents a significant leap forward in digital content creation, presenting both unprecedented opportunities and substantial ethical challenges.

Legal and Ethical Considerations

The emergence of AI-driven content generation has prompted intense legal and ethical debates surrounding intellectual property rights, personal image representation, and consent. Celebrities and public figures find themselves increasingly vulnerable to unauthorized digital representations that can potentially damage their professional reputation or personal brand. Legal frameworks are struggling to keep pace with technological advancements, creating a complex regulatory landscape that requires nuanced approaches to digital content governance. Policymakers and technology experts are collaborating to develop comprehensive strategies that balance technological innovation with individual privacy protections.

Future Implications and Societal Adaptation

As artificial intelligence continues to evolve, society must develop sophisticated mechanisms for critical media consumption. Educational initiatives focusing on digital literacy and technological awareness will become increasingly crucial in empowering individuals to navigate this complex technological landscape. The ongoing dialogue between technological innovation, ethical considerations, and societal adaptation will shape our collective understanding of digital representation in the years to come. Maintaining a balanced perspective that acknowledges both the transformative potential and the risks of AI-generated content will be paramount.
